We want to bring this topic to SXSW 2026, with our neuroscientist friend Sahar Yousef, and we need your votes to get there. Just give the heart a click.

The world loves AI. 1 billion people are already using ChatGPT – about 12% of humanity. And it happened in just two years. This is a crazy fast level of adoption, and it validates the Silicon Valley playbook: Make it great, make it cheap, get us addicted, then figure out how to make billions – or trillions.

We love AI because it offers what we’re always looking for: cognitive shortcuts (see: the entirety of human history, from books to calculators to Netflix). We’ve always done this, but AI operates at a whole new scale. We’re not outsourcing discrete cognitive tasks like addition or choosing a movie. We’re outsourcing thought across vast swaths of human knowledge – and it’s making us dumber.

This will not end well for most of us. We’ll let AI take over a few tasks, and soon find it’s doing all of them. We’ll lose our minds, our jobs, and our opportunities.

But it doesn’t have to happen this way. Here’s how to see the path ahead – and take a different one.

The beginning of the end

In March 2023, I used ChatGPT for the first time, and I (and a hundred million other people) quickly realized it would be my next big outsourcing tool. Forget having to type “vacation recommendations for Sicily” into Google to start a multi-hour research project – now I could outsource my entire vacation plan to AI.

At this point, I use ChatGPT or Claude every day. I consult it more frequently than my COO, my board, my wife, or my doctor. I’m not using AI to generate emails – I’m using it to think.

I even added an “AI monitor” at the start of this year, so I can simply turn and start working with my AIs. And in the car, I’ve ditched podcasts in favor of talking to AI, usually via ChatGPT voice mode.

These days, every medium-to-high-stakes decision in my life merits a conversation with AI. Prep for a board meeting? Ask AI to play the role of a board member. Finding ways to slow the progression of my prostate cancer? Ask AI to play the role of a medical researcher. Struggling to manage all my priorities and projects? Ask AI to play the role of an executive coach (and I live with one).

AI has made my high-effort brainwork faster and more productive.

But I am also getting cognitively lazy.

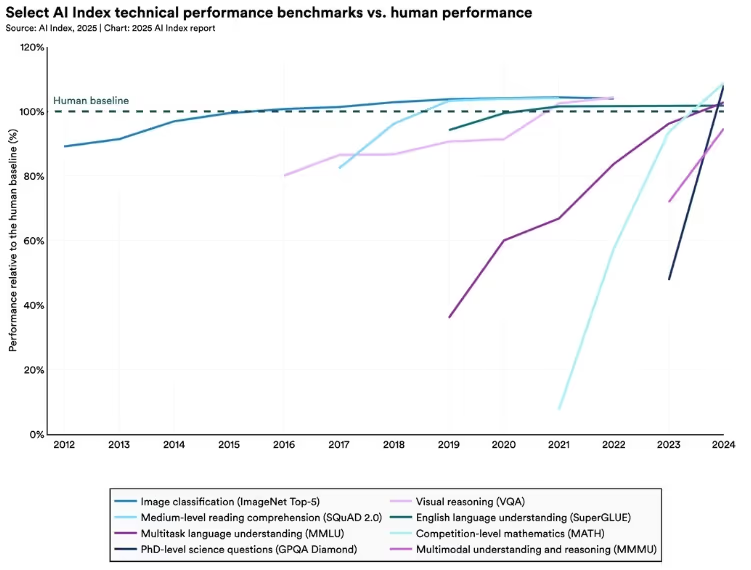

In the last six months, generative AI performance has improved a lot – not just on the most demanding benchmarks, but also in the real world. GPT-5 may not have ushered in AGI, but it performs incrementally better across a number of benchmarks, including reasoning.

I used to have to check AI’s drafts thoroughly to catch hallucinations and correct errors. But now, it gives me a pretty good first draft 90% of the time, in a very “human” tone … and frankly, I’m losing the motivation to check its work.

Two years ago, I thought the workforce would divide into “those who don’t use AI” and “those who do.” Now I see that’s wrong. In five years, everyone will use AI. The real divide will be between those who conduct their AIs to do better work – and those who outsource their thinking to it.

This divide will impact career progression, compensation, and access to opportunity. I love Mo Gawdat’s suggestion that using AI is like “borrowing 40-50 points of IQ” – but that’s only true if you’re still doing some of the thinking for yourself.

How outsourcing degrades our thinking

Humans have always offloaded cognitive work. Before books, trained bards memorized Homer's entire Iliad (roughly 15,000 lines of text); writing spared the rest of us that feat of memory. Today technology is an extension of our brains, letting us offload basic math, navigation, or note-taking in a meeting.

On the whole, these use cases are relatively narrow and not life-changing. If I can’t make sense of a map anymore (as that part of my brain has atrophied from using GPS), what's the big deal? I don’t plan to go hunt for dinner anytime soon.

AI is different. It can handle almost any cognitive task – writing, research, coding, problem-solving, etc.

And because it handles “knowledge tasks,” using AI feels productive. When you're looking at TikTok, you know you're wasting time. When you use Claude or ChatGPT, you feel like you're becoming smarter and more efficient. There's no "brain rot" alarm going off like there is with social media. Your CEO is even telling you that you have to use it (not the case with scrolling Instagram).

In a recent piece for the WSJ, tech reporter Sam Schechner (an American living in Paris) said he started using ChatGPT to draft emails in French. It felt like a net positive – enabling better communication with his French friends – until he started to feel his brain “get a little rusty.” He found himself grasping for the right words to text a friend.

This is how AI outsourcing begins: innocently, and gradually. You ask AI to draft an email. It does a good job and saves you 10 minutes. Next you ask it to outline a presentation. It nails it.

So you start using it for more complex tasks like “draft our Q3 strategy,” “write the board deck,” etc. You start depending on AI to do the work, and you stop checking it as thoroughly. And slowly, your skills atrophy.

The evidence is already here. Earlier this year, Microsoft and Carnegie Mellon released a much-discussed paper suggesting that gen AI can erode our critical thinking: the more confident knowledge workers are in AI’s output, the less likely they are to use their own brains. (Even while reading the Microsoft paper, I thought, “Ugh, it’s long – Claude, just tell me what it says.”)

People who trust AI (like me) count on themselves to act as its fact-checker. But there are two problems with that. First, we overestimate our ability to spot AI’s mistakes.

Second, the more we get used to not doing the work, the stronger the temptation to skip the fact-checking step. Research from the University of Georgia (UGA) shows that the harder a task is, the more likely people are to default to a machine-generated suggestion. That’s how you get high school papers that say “as a large language model …” or Google releasing an AI ad with an obvious error.

Right now this is only a burgeoning problem – because just 8% of workers use AI every day. But what happens when that number hits 40% or 70%? The AI crutch becomes the spine, and eventually we can’t stand on our own.

Drivers vs. passengers

In the next 10 years, AI will be easily accessible in every type of knowledge work. And the knowledge workforce will divide into two groups: AI drivers vs. passengers.

AI passengers will happily delegate their cognitive work to AI. They’ll paste a basic prompt into ChatGPT, copy the result, and submit it as their own. They won’t fact check. They won’t push the AI to verify its sources or consider decisions from multiple angles.

In the short term, these people will look more productive and likely be rewarded for doing faster, better work. But that advantage is temporary. Everyone gets the same AI superpower, so if you take the V1 from AI as your finished output, then your work will be undifferentiated.

As AI improves and operates with less human oversight, passengers will be judged surplus, since they add nothing to AI's output. Worse, they'll gradually lose their ability to produce original thought.

AI drivers will insist on directing AI. They’ll do some original thinking themselves, use AI to pressure-test their assumptions, and rigorously check what it says. They won't accept AI-generated results they can't validate. They’ll look to AI for a conversation – not the answer.

In the short term, these people may be pressured to lean on AI more. Short-sighted managers will say, “Just have AI do it,” or praise whoever gets to a result fastest.

But long term, the economic divide between these groups will widen dramatically. AI drivers will claim a disproportionate share of wealth in the next decade, while passengers enjoy a false sense of security. They're using cutting-edge technology but becoming increasingly replaceable, all while believing they're on the right side of the AI revolution.

How to be an AI driver

At this point there seems to be no off-ramp from AI, so refusing to use it at all isn’t the answer. AI will make us smarter, faster, and more productive, and we need to use it to at least stay in the game.

And we can’t stop it anyway. Corporations are richly incentivized to implement AI. Resisting it would be like telling your boss, “Don’t hire a cheap intern – I want to write all the YouTube transcripts myself.”

But you can make yourself AI’s boss. If your boss stopped giving you direction and checking your work, they'd probably be fired, and you'd be promoted. So don't be the lazy boss of your AI.

Instead:

- Start with what you know. Don’t go straight to asking AI for tasks that you can’t validate. Use it in areas where you have pre-existing expertise, so you can be critical of its output.

- Have a conversation instead of asking for the answer. Don’t ask AI, “What should we do with our marketing budget?” (It won’t give you a good answer.) Give AI constraints, inputs, and options, and debate the options with it.

- Be hyper-vigilant. When you work with AI, be an active participant. Scrutinize what it gives you. Ask yourself if you agree or disagree. Don’t assume the output is good enough because it looks good enough – challenge yourself to ask, “Is this a good recommendation? Would I stand behind this?”

- Practice active skepticism. Constantly probe AI with your point of view. Ask questions as you would with a colleague. “Isn’t that downplaying the risk of this venture?” “Do you really think that conversion metric is doable in the first month?”

- Resist outsourcing every first draft. The blank page is scary – it's also crucial for activating your brain. Start some projects without AI assistance.

- Make the final call, and own it. AI should assist with every medium-to-high-stakes decision you make, but it doesn’t make the final call. Own your decisions as a human – it’s your job / raise / promotion / reputation on the line.

Your mind is a terrible thing to waste

Working with AI the right way is like borrowing 40 points of IQ – pretty much a no-brainer for anyone who wants to get ahead in business. Finally, you have a thought partner who’s available 24/7, has endless patience for your questions, and has “expertise” on any topic you want to discuss.

But you’re also at a crossroads. You’re going to see many friends and colleagues opt out of “active thinking” and outsource their decision-making to AI. Many of them won’t even notice their cognitive skills atrophying until the damage is done. And by then – as with our addictions to our phones – it’ll be hard to go back.

Don’t be this person. Use AI to challenge and strengthen your thinking, not replace it.

The question isn’t, “Will you use AI?” The question is, “What kind of AI user do you want to be: driver or passenger?”
