The model that Sam Altman likened to the Manhattan Project and said left him feeling “useless” is here.

But the average leader isn’t looking for nuke-level power. They’re looking for productivity gains, better outputs, and solutions to the friction points AI itself creates (e.g. hallucinations).

Our high-level take: GPT-5 does a lot of things better than its predecessor, and it certainly has the potential to expand OpenAI’s reach and drive down churn. But the majority of people aren’t sliding off AI because of minor differences in model capabilities … they’re sliding off because they don’t know what to use AI for, and they feel threatened by it. So keep an eye on the model releases, but prioritize what matters most: revealing great use cases, building AI into workflows, and helping your peers understand its potential.

But first, how we got here

GPT-5 is the 7th model release from OpenAI this year, and the 4th reasoning model release.

The first of the year was GPT‑4.5 (“Orion”) in February, marking a major step forward in conversational fluency, contextual awareness, and reliability – but it wasn’t designed for deep reasoning.

By April, Altman was hinting at unifying the “o-series” (aka the reasoning models) with the main GPT line to eliminate model-switching altogether and combine the capabilities of these various models into one: a single model that was thoughtful, smart, and low in hallucinations.

On August 7, GPT‑5 was released. OpenAI is calling it their “smartest, fastest, most useful model yet” – specifically framing it as a major milestone on the path to AGI – and it’s now available to all ChatGPT users, even the free ones (with some limitations).

What sets GPT-5 apart from the others

If you have model release whiplash, allow us to break down why this is any different from the other 6 you’ve gotten this year.

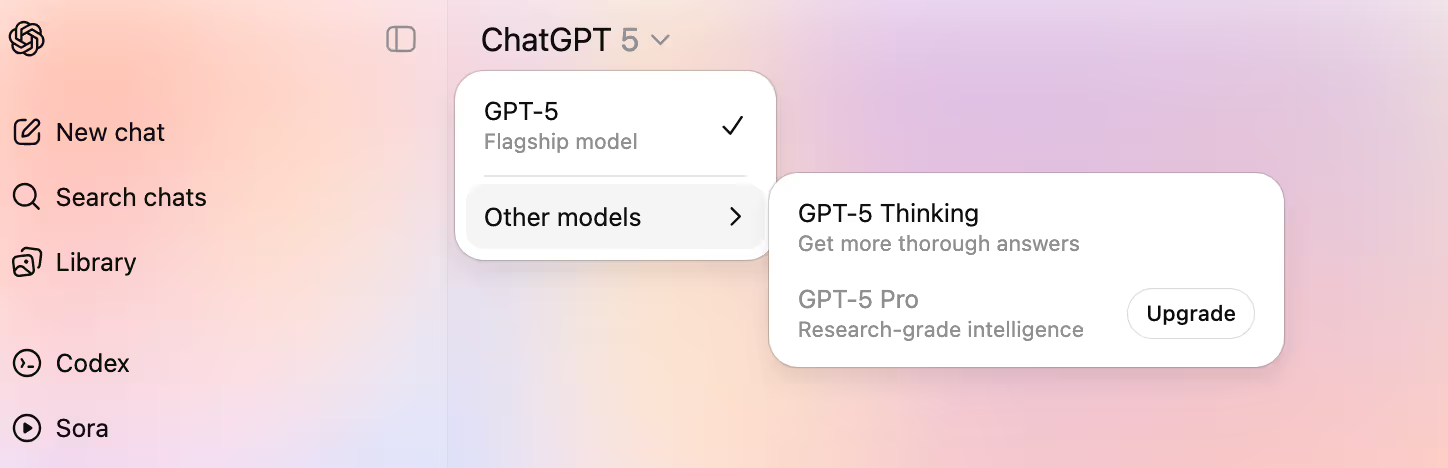

1. You don’t need to choose a model for every use case

In the past, OpenAI users had to choose different models for different tasks – GPT-4o for general chatting, GPT-4.5 for writing and brainstorming, o3 for complex problem-solving, etc.

But GPT-5 automatically figures out what kind of task you're doing and routes it to one of its new, specific expert “personas”:

- Want to write a poem? GPT‑5 will likely engage its language generation and creative writing modules.

- Need to solve a math problem? GPT‑5 will route you to a symbolic reasoning or mathematical logic expert.

- Just want to chat? GPT‑5 activates its conversational module – designed for natural, human-like flow.
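To make the routing idea concrete, here is a toy sketch in Python. It is not how OpenAI implements routing; the keyword lists, the `Route` type, and the handler labels are all hypothetical, and a real router would presumably rely on a learned classifier rather than keyword matching. It only illustrates the shape of the idea: the system inspects the request and picks a handler so the user doesn't have to.

```python
from dataclasses import dataclass

# Hypothetical keyword hints, for illustration only.
# A production router would likely use a learned classifier instead.
REASONING_HINTS = ("solve", "prove", "debug", "calculate", "step by step")
CREATIVE_HINTS = ("poem", "story", "toast", "lyrics")


@dataclass
class Route:
    handler: str  # illustrative label, not a real OpenAI model name
    reason: str   # short explanation of why this handler was chosen


def route(prompt: str) -> Route:
    """Pick a handler for a prompt using simple keyword matching."""
    text = prompt.lower()
    if any(hint in text for hint in REASONING_HINTS):
        return Route("reasoning", "prompt looks like a multi-step problem")
    if any(hint in text for hint in CREATIVE_HINTS):
        return Route("creative", "prompt asks for creative writing")
    return Route("conversational", "no special signal, default to chat")


if __name__ == "__main__":
    for p in ("Write a poem about autumn",
              "Solve 3x + 7 = 22",
              "How was your weekend?"):
        r = route(p)
        print(f"{p!r} -> {r.handler} ({r.reason})")
```

The point of the sketch is the division of labor: the person writes one prompt, and the choice of handler happens behind the scenes.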

TL;DR: You don’t have to know the right model to get the best results for your use case anymore – GPT-5 will figure it out for you. In our opinion, this is THE most impactful part of this release for most knowledge workers, and will likely help OpenAI drive down the churn (and potentially the token costs) of people who weren’t getting good results because they weren’t using the right models or were using pricier models for the wrong things. But this is essentially OpenAI correcting a problem they created – which we shouldn’t be that impressed by.

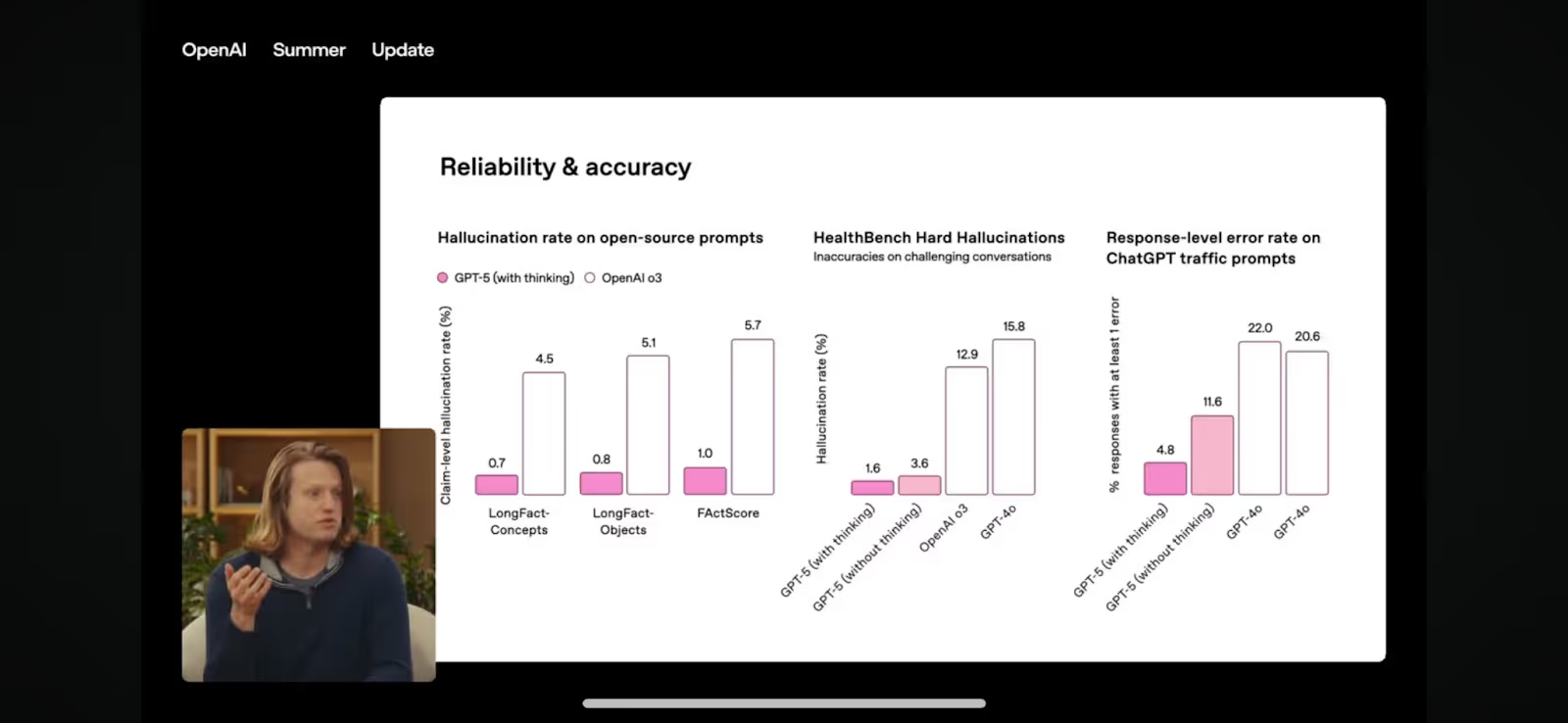

2. It’s trained to hallucinate less and admit when it’s stumped

GPT‑5 was specifically trained to say “I don’t know” when it’s uncertain of an answer. This is a significant improvement from the sycophancy of other models like GPT-4o – whose releases were actually a step back in terms of hallucination rates.

It’s also designed to provide more sources with its responses and intelligently determine the right level of explanation for your question.

These updates make GPT-5 more accurate and less likely to make errors in the middle of a reasoning chain. It scored much higher in accuracy on benchmark tests in math, advanced math, software engineering, and grad-level questions.

TL;DR: While it’s still essential to check AI outputs for accuracy, you no longer have to sacrifice accuracy for exceptional reasoning power – which was a significant problem in previous thinking models. That said, hallucinations are still possible (and other reviewers have pointed out they do happen) – so this is less a massive step up than a correction for previous versions.

3. You can give it way more inputs

GPT‑5 is now OpenAI’s most advanced multimodal model. Building upon 4o’s capabilities, it has:

1. A much bigger context window. GPT-5 can process entire books, long videos, or complex documents as input and supports up to 400,000 tokens (compared to 128k in GPT‑4o), and it can do so without losing the thread of the conversation. This puts it in second place to Gemini, which has the largest context window of the major LLMs at 1 million tokens.

2. Task routing for inputs. This model can determine which part of itself should process multimodal inputs. For example: you upload a chart → vision system extracts it → reasoning system interprets it → math module explains it.

3. Combining deep reasoning with multimodality. Previous ChatGPT models could see, but GPT‑5 can think about what it sees or hears with step-by-step logic.

4. New ways to pull in context. You can speak to GPT-5, show it your calendar, ask it to write emails, or even feed it a video of your whiteboard notes.
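To get a feel for what those context window numbers mean in practice, here is a rough back-of-the-envelope check in Python. The window sizes are the ones quoted above. The 4-characters-per-token ratio is a common rule of thumb for English text and is an assumption here, as are the helper names and the reply budget. Actual token counts depend on the model's tokenizer.

```python
# Context window sizes quoted in the article (in tokens).
CONTEXT_WINDOWS = {
    "GPT-5": 400_000,
    "GPT-4o": 128_000,
    "Gemini": 1_000_000,
}

# Assumption: ~4 characters per token, a rough rule of thumb for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a piece of text."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits(text: str, model: str, reserve_for_reply: int = 4_000) -> bool:
    """Return True if the text plus a reply budget fits the model's window."""
    return estimate_tokens(text) + reserve_for_reply <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    # A stand-in for a long document: 1,000,000 characters.
    document = "word " * 200_000
    print("Estimated tokens:", estimate_tokens(document))
    for model in CONTEXT_WINDOWS:
        print(model, "fits" if fits(document, model) else "too long")
```

Under these assumptions, a document of about a million characters (roughly a long book) lands near 250,000 tokens: too big for a 128k window, but within the 400,000-token limit.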

TL;DR: This changes how you should think about prompting. With the addition of reasoning in multimodality, the emphasis is now on giving ChatGPT the best possible uploaded context – aka background docs, data, etc. – versus directing it through a written prompt.

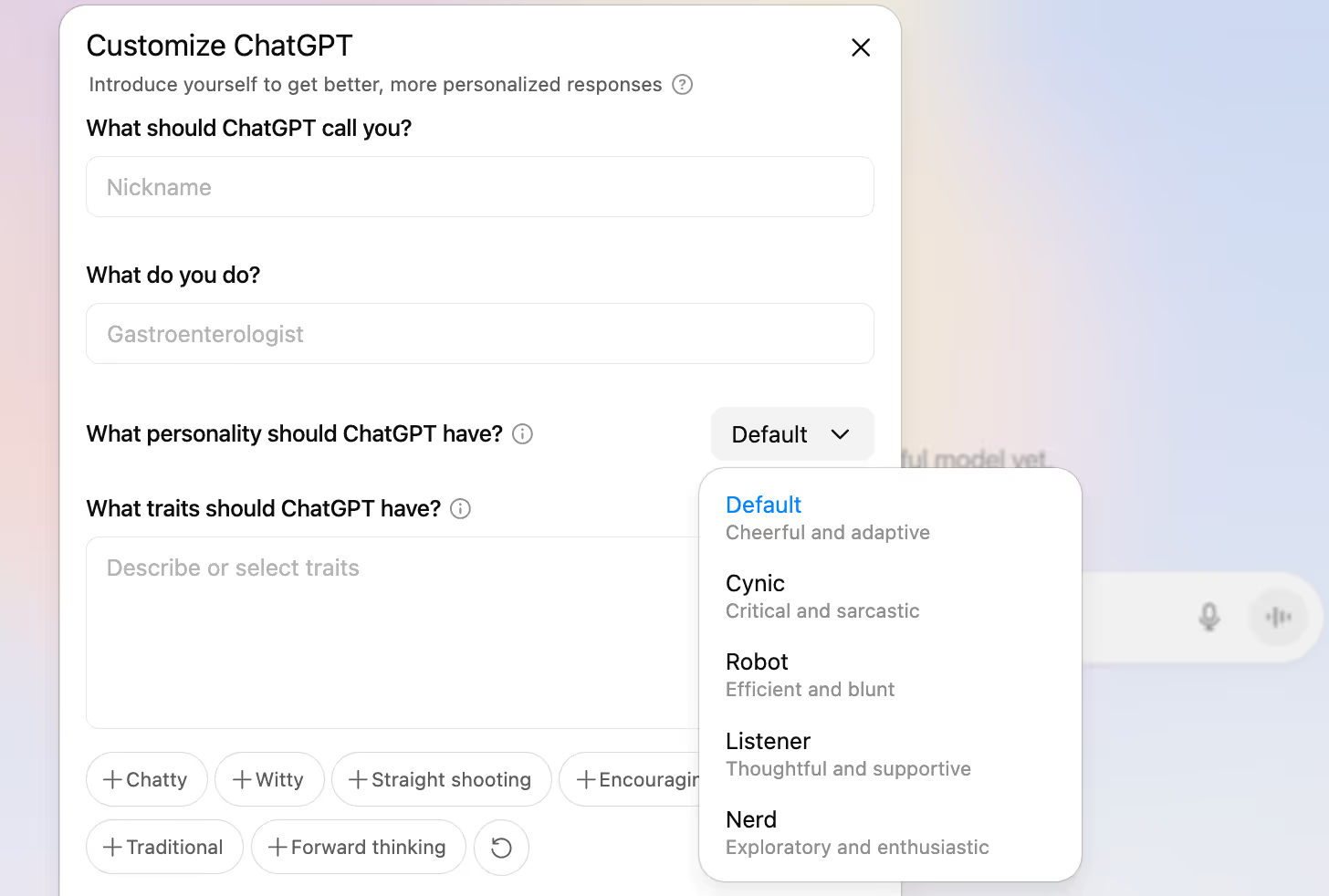

4. It has built-in personalities

GPT‑5 can now talk to you in different “vibes” – aka preset ways of changing the tone, style, and emotional approach of the AI without changing its underlying intelligence. For example:

- The Listener: Supportive and calm

- The Nerd: Detail-oriented and curious

- The Robot: Neutral and precise

- The Cynic: Dry humor and skeptical

This removes the need to manually describe the tone of your desired output with every single prompt. It can adapt to drafting a serious email or a heartfelt wedding toast.

And beyond that, GPT-5’s vibe system is a first step toward true conversational adaptability – which makes the AI easier to collaborate with over time.

TL;DR: This is probably a sleeper feature. It seems like a gimmick (and maybe it is, time will tell), but it might actually lead to a lot less prompt engineering to get to a usable output. This is especially great news for content creators. Plus, varied personas were one of Grok’s main differentiators – so this is one of many examples of OpenAI’s attempt to close the gap on competitor advantages.

5. It’s finally catching up to Claude’s coding abilities

OpenAI claims GPT-5 is the best coding model out there – something Anthropic also claims of Claude. And while Claude is unlikely to be immediately unseated by OpenAI’s first production-grade coding model, GPT-5 has finally added some features you used to have to go to Claude for:

- Execution, not just ideation. GPT-5 can now generate a front-end prototype from a natural language prompt.

- More bandwidth. Due to GPT-5’s larger context window, it can now read an entire codebase, keep track of complex dependencies, and review large pull requests at once – aka less breaking down tasks into bite-sized prompts.

- More thorough debugging. GPT-5 leverages its new reasoning modules to find a bug, understand why it happened, and suggest a fix for it.

- More confidence in multi-step instructions. ChatGPT’s reasoning models have had issues in the past with following long prompts and consistently adhering to step-by-step instructions. OpenAI claims this is less of an issue with GPT-5 because it divides the labor among its various personas.

And because it’s been trained to hallucinate less, it’s more likely to say something like “I’m not certain this function exists in version X – shall I check the documentation?” instead of inventing functions and calls that don’t exist.

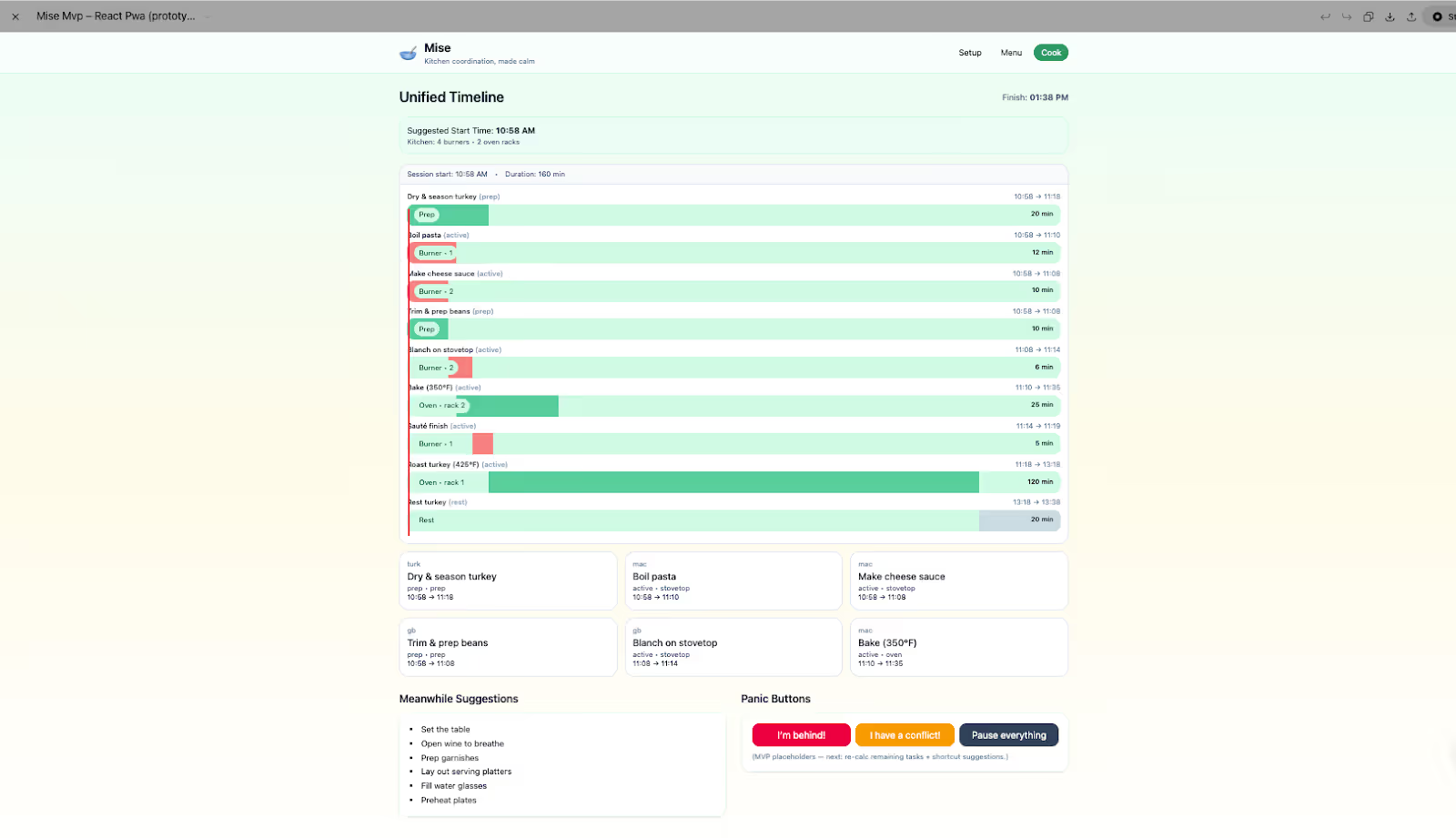

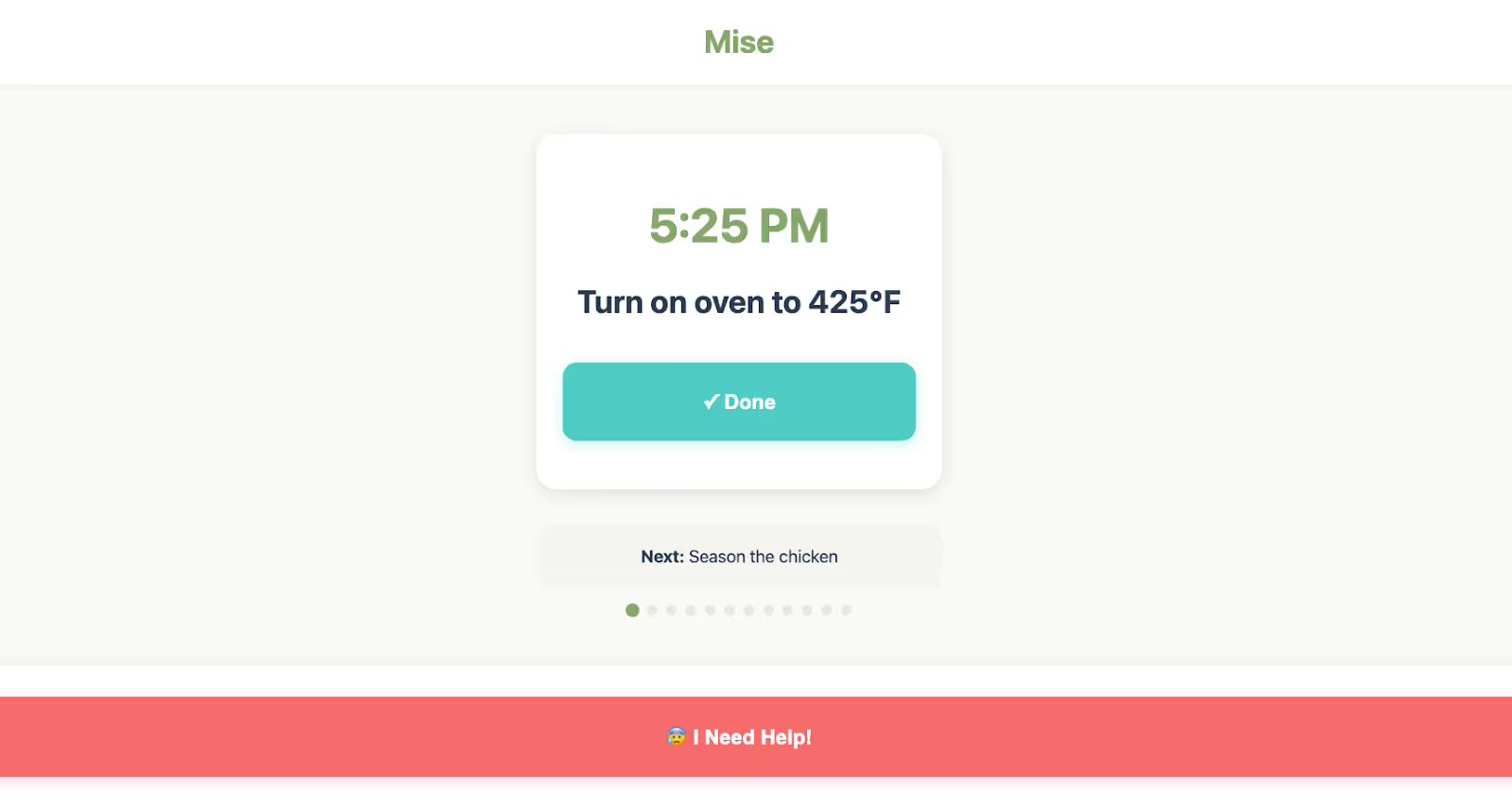

We tested ChatGPT and Claude by asking each to build an app that helps you coordinate cooking multiple dishes at the same time. Here’s how they did:

ChatGPT actually excelled at understanding the overall goal of the app, providing screens for kitchen setup, menu inputs, and a Gantt chart displaying all of the recipe steps. Claude, on the other hand, had a much more pared down version that only allowed for pre-defined recipe input and a single step per screen.

TL;DR: The jury is still out on whether this is the best coding model of the foundational LLMs – some are saying Claude Code is still the leader. But it does reposition OpenAI as a legitimate contender with Claude for coding and prototyping tasks – and we’ve gotten good V1 prototypes with GPT-5 already.

Not all of these capabilities are going to matter for the day-to-day tasks of your team, so here’s what we think you should take away:

1. Model consolidation solves a big pain point. Of all the features released this week, this will have the biggest impact on your work. If you've ever felt confused about coding vs. reasoning vs. base models, you can now focus on using AI as a thought partner and stop worrying about whether you have the best model for the job.

While this is still a far cry from AGI, GPT-5 shows AI is becoming smart enough to leverage itself. You no longer need to be an expert at the differences in the model outputs to get maximum value from it.

2. Hallucinations are less of an excuse. Based on our recent data, 30% of the workforce limits their AI use because they’re worried about hallucinations. That worry is becoming much less of a problem. And while you should still be doing your own analysis and bringing your own POV, these updates make it easier to trust AI’s contributions.

3. LLM centralization is arriving. With this release, OpenAI made strides toward closing the gap on its competitors' advantages – incorporating the larger context windows, superior coding capabilities, various personas, and multimodal reasoning.

Previously, you might have jumped from ChatGPT to Gemini for its 1 million token context window or to Claude for its superior coding and prototyping. But now similar capabilities are all available in ChatGPT. This heralds a future where we all may be picking one platform to work in exclusively.

4. This is not the time to bounce off. As with every major AI release, competitors are going to respond quickly. The real excitement isn't just what GPT-5 can do today – it's what this will push the entire industry to deliver tomorrow. So if you’re getting fatigued by model release after model release, this is the time to wake up.