Sixty percent of knowledge workers limit their AI use because they’re worried about data privacy and security, according to our latest AI Proficiency Report. But over the last 18 months, many LLM providers have added more transparent data usage policies, options for users to opt out of data collection, and the ability to disable storage of their chat history.

Your personal information is safer than you may think, if you choose the right tool. Here’s your complete guide to picking the LLM that matches your privacy expectations.

Here’s the TL;DR

Enterprise-grade solutions like ChatGPT Enterprise or Microsoft Copilot for Business have the most robust data governance policies and the clearest guidelines for data usage, and they guarantee your data won't be used for model training.

But for individual users, ChatGPT and Perplexity offer the most transparent and controllable privacy settings. Both platforms provide explicit toggles to enable or disable AI training on your data. While disabling these features might limit access to certain capabilities (like beta features), you get maximum control over your data usage.

What about all the other platforms?

Other platforms fall into one of two categories:

- Built-in privacy controls with limited user customization

- Complex or unclear data policies

Claude and Microsoft Copilot (consumer version) have clear but preset privacy controls. Claude doesn't use your data for training by default, except when you provide explicit feedback. Copilot strips personal identifiers from training data and offers clear personalization and training controls.

Gemini has a more complex data policy. While it offers some controls through Google Account settings, your conversations may still be reviewed by human evaluators and used to improve their models. The retention and usage policies aren't as straightforward as other platforms.

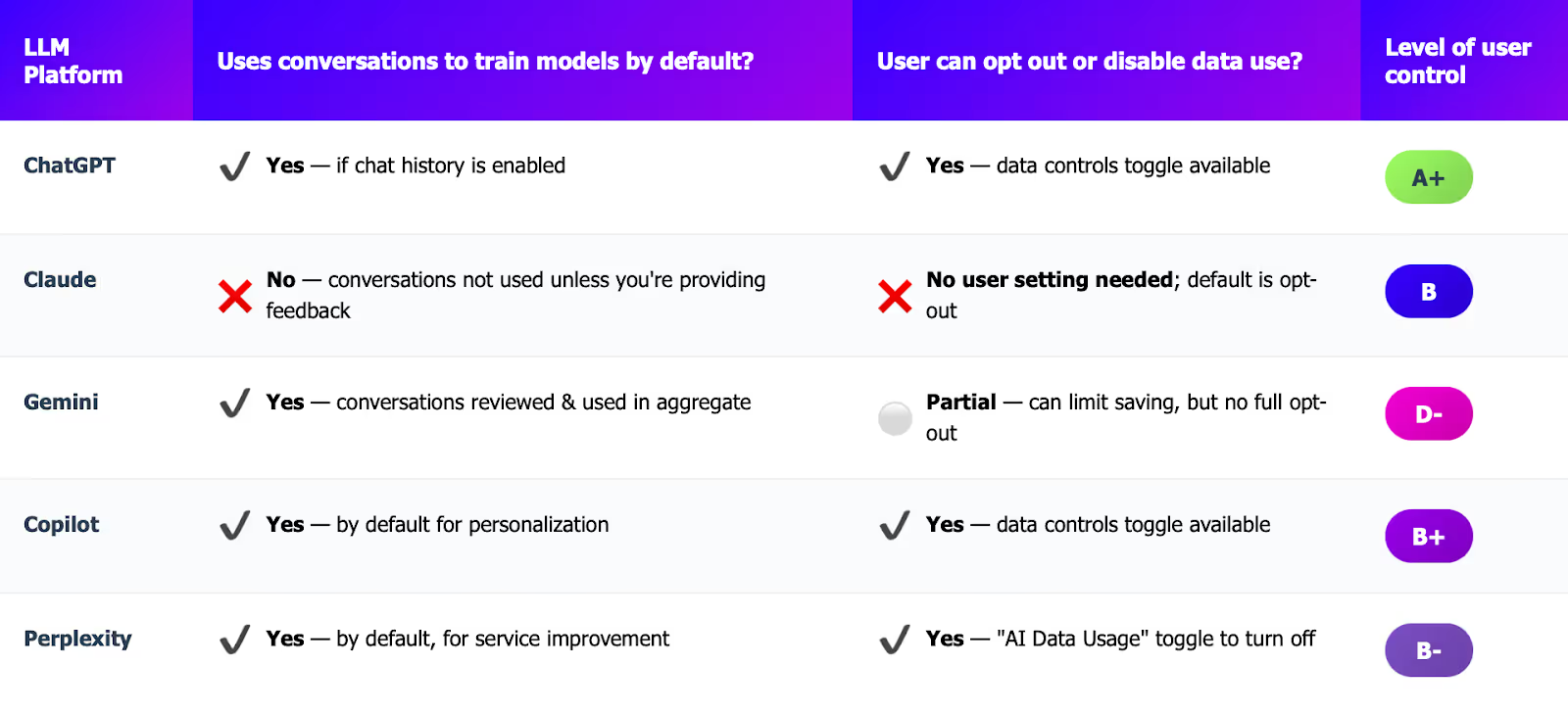

Below are a side-by-side comparison and more details on each platform.

A quick side-by-side of consumer LLMs

Updated as of publication (May 2025)

✔️ - Yes, the platform does this

❌ - No, the platform does not do this

⚪ - Unclear or unknown; the platform does not have a clear policy.

The full view of privacy controls in consumer models

ChatGPT by OpenAI

How to enable privacy controls: OpenAI provides an account-wide data control for ChatGPT. In Settings → Data Controls, you can turn off “Improve the model for everyone”. This setting syncs across your devices when logged in, so you only need to set it once. There is also a Temporary Chat mode for truly short-lived conversations.

How the privacy controls work:

- Default training policy: By default, OpenAI may use your prompts and ChatGPT responses to train and improve its models. If you disable the “Improve the model” setting, your new conversations will not be used in training.

- Chat history: When model training is disabled, your chats still appear in your history (so you can continue the conversation later), but OpenAI flags them internally to exclude them from training datasets.

- Temporary chats: If you use Temporary Chats, those conversations are not saved to your history at all and aren’t used for training. Temporary chats are stored on OpenAI’s servers only for 30 days (for abuse monitoring) and then permanently deleted.

- Retention: OpenAI retains normal chat history on its servers indefinitely unless you delete it. If you delete chats (or your account), the data is removed from OpenAI’s systems after 30 days (barring legal requirements). With Temporary Chat or disabled history, chats are automatically deleted after 30 days.
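
The 30-day windows described above come down to simple date arithmetic. The sketch below is only an illustration and does not call any OpenAI API: `RETENTION_AFTER_DELETE` and `latest_purge_date` are hypothetical names, and the 30-day figure is taken from the policy as summarized in this article.

```python
from datetime import date, timedelta

# Assumption: the 30-day window is the figure quoted in this article,
# not a value read from any OpenAI service.
RETENTION_AFTER_DELETE = timedelta(days=30)


def latest_purge_date(deleted_on: date) -> date:
    """Return the last day a deleted or temporary chat may still sit on the server."""
    return deleted_on + RETENTION_AFTER_DELETE


# A chat deleted on 1 May 2025 should be gone by 31 May 2025.
print(latest_purge_date(date(2025, 5, 1)))  # 2025-05-31
```

The same calculation applies to a Temporary Chat if you pass the day the chat took place instead of a deletion date. Legal holds, which the policy mentions as an exception, are not modeled here.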

Considerations for protecting your data:

- Enterprise plans: ChatGPT Enterprise and Business plans do not use your prompts or outputs for training by default, so no action is required. If you’re in an organization that uses these plans, your data stays with you by default.

For more information: see OpenAI’s Data Controls FAQ and Enterprise Privacy page.

Claude by Anthropic

How to enable privacy controls: Anthropic’s consumer Claude AI comes with privacy protections “out of the box,” and there are no user-configurable switches for data sharing. In other words, there is no training opt-out to toggle, because Claude is already designed not to use your data for model training by default.

How the privacy controls work:

- No training without consent: Anthropic does not use your prompts or Claude’s responses to train its generative models (like Claude) unless you explicitly opt in. Explicit opt-in is given through feedback mechanisms (e.g., thumbs up/down) or by joining specific programs like the Development Partner Program.

- Standard retention: When you delete a conversation in Claude, user inputs and outputs are automatically deleted from Anthropic’s backend systems within 30 days of receipt or generation.

- Enterprise clients: For commercial products like Claude for Work or the Anthropic API, the same 30-day deletion policy applies, unless a different agreement is in place.

Considerations for protecting your data:

- Feedback usage: If you provide explicit feedback (e.g., thumbs up/down), Anthropic may use that specific interaction to improve Claude, including for training purposes.

- Trust & safety reviews: Conversations flagged for violating Anthropic’s Usage Policy may be reviewed and used to enhance safety systems.

For more information: see Anthropic’s Privacy and Legal FAQ and Acceptable Use Policy.

Gemini by Google

How to enable privacy controls: Google’s Gemini AI (which has replaced the Bard brand with an upgraded model) provides several privacy and data controls integrated with your Google Account. Key settings include:

- Gemini Apps Activity: This is similar to Web & App Activity (how Google tracks your browsing history), but for Gemini. By default, Google saves your Gemini conversation history to your account for 18 months. You can adjust the retention period to 3 or 36 months, or turn it off entirely in your Google Activity controls. To manage this, visit My Activity > Gemini Apps Activity and set your preference (including “Auto-delete” options).

- Personalization toggle: Google now lets you decide if your broader Google account data (like your search history or other app usage) can be used to personalize Gemini’s responses. This is an opt-in setting – for example, you might allow Gemini to use your Google Search history to give more tailored answers. It’s accessible via the Gemini Privacy Hub or your account settings.

- Data download or deletion: Through Google’s privacy tools, you can export your Gemini conversations (using Google Takeout) and delete conversation history from your account. Deleting your Gemini chats from My Activity will remove them from your account (though Google may still retain them briefly as described below).

How the privacy controls work:

- Data usage: By default, Google will use your Gemini conversations to improve its AI models and services. A portion of chats (with personal identifiers removed) are reviewed by human evaluators to rate quality, safety, and accuracy. These reviews help refine the model’s responses (via fine-tuning or reinforcement learning). In Google’s terms, your chats help “ensure and improve the performance” of their services.

- Human review and retention: If your conversation is selected for human review (Google says this may include third-party contractors trained for this task), that conversation is stored separately from your Google Account and retained for up to 3 years. Even if you delete your activity or turn off saving, reviewed chats are kept (anonymously) for ongoing improvement and safety training. Non-reviewed chats that are saved to your account follow your chosen retention schedule (e.g. auto-delete after 3 months if you set that).
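
To make the interaction between your auto-delete setting and human review concrete, here is a minimal sketch. It is not a Google API: `add_months`, `latest_retention`, and both constants are hypothetical names, the allowed periods (3, 18, or 36 months) and the 3-year review window are the figures quoted in this article, and turning activity off entirely is not modeled.

```python
import calendar
from datetime import date

# Assumptions taken from this article, not from any Google API.
ALLOWED_AUTO_DELETE_MONTHS = (3, 18, 36)  # 18 is the default
REVIEWED_RETENTION_MONTHS = 36            # "up to 3 years" for human-reviewed chats


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of the target month."""
    index = start.month - 1 + months
    year = start.year + index // 12
    month = index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def latest_retention(chat_date: date, auto_delete_months: int, human_reviewed: bool) -> date:
    """Return the latest date a chat may be kept under the rules described above."""
    if auto_delete_months not in ALLOWED_AUTO_DELETE_MONTHS:
        raise ValueError(f"unsupported auto-delete period: {auto_delete_months}")
    # Reviewed chats are stored separately and ignore the account's auto-delete setting.
    months = REVIEWED_RETENTION_MONTHS if human_reviewed else auto_delete_months
    return add_months(chat_date, months)


print(latest_retention(date(2025, 5, 15), 3, human_reviewed=False))  # 2025-08-15
print(latest_retention(date(2025, 5, 15), 3, human_reviewed=True))   # 2028-05-15
```

The point of the example is the second call: even with the shortest auto-delete period, a chat that was selected for review can outlive your setting by years.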

Considerations for protecting your data:

- Don’t input confidential info: Google explicitly warns users not to enter any sensitive or confidential information into Gemini. Because conversations might be seen by human reviewers and used to improve AI, you should treat it like a semi-public forum. Google’s interface and Privacy Hub repeat this guidance.

- Ads and personalization: As of now, Google says Gemini conversations are not used to target ads. (For instance, chatting about travel plans in Gemini won’t immediately lead to travel ads on Google – at least not currently.) However, they have left the door open to integrate Gemini with their ad ecosystem in the future, and promise to inform users if that changes. Keep in mind that other Google services (Search, etc.) might use your interactions for ads if you haven’t disabled Web & App Activity.

- Gemini for Google Workspace: This plan gets enterprise-grade security – submissions aren't used to train models and are never reviewed by humans, plus interactions stay within your organization’s workspace. No content uploaded is used for model training outside of your domain without permission.

For more information: see Gemini Apps Privacy Hub and Gemini Privacy & Safety Settings.

Perplexity

How to enable privacy controls: Perplexity provides a clear setting to control data usage. Go to Your Profile → Settings → AI Data Usage. Here you can toggle off the option that allows Perplexity to use your data to improve their models. This applies to both the free and Pro versions of Perplexity, as well as their mobile apps. By default, this toggle may be on (meaning data is used for training), so privacy-conscious users should turn it off.

How the privacy controls work:

- Opt-out effect: With AI Data Usage turned off, Perplexity will not use any questions you ask or answers you receive to train or enhance their AI models.

- No training: Your interactions won’t be used in fine-tuning or to improve answer quality. Essentially, your data stays out of their learning loop. (Perplexity confirmed this policy for both consumer accounts and their API offering – data won’t be used for training by default, especially if you disable it.)

- Data retention: Perplexity retains your account information and past queries for as long as your account is active. If you delete your account, they will delete your personal data from their servers within 30 days.

Considerations for protecting your data:

- Use incognito mode for searches and chats: Click your avatar → Incognito; those searches are never stored and Memory is automatically off.

- Clear or turn off Memory: In Settings → Personalize → Memory you can toggle it off, delete specific memories, or “Clear all”. Deleted memories vanish from answers, though Perplexity keeps a safety log for up to 30 days.

For more information: see Perplexity’s Privacy Policy page.

Microsoft Copilot

How to enable privacy controls: Microsoft provides two experiences: one for consumers and one for businesses and enterprises. For consumers, there are clear settings: Go to your Profile → Click your name and email → Select Privacy. From here, you can toggle off training on text and voice, while managing memory and personalization features. If you’re using Copilot at work or considering buying it for your business or enterprise, you’ll need to explore the data use and privacy controls for the non-consumer versions of Copilot.

How the privacy controls work:

- Training policies vary by account type: Enterprise/commercial accounts are protected by default, and Microsoft doesn't use their data to train AI models. For consumer accounts, Microsoft plans to use interaction data for training (with personal identifiers removed) but will offer an opt-out option.

- Personalization and memory: Copilot can personalize its responses by learning your preferences if you allow it. When signed in as a consumer, you have a dedicated setting to let Copilot “personalize your experience”. If personalization is on (the default), Copilot will build a short-term memory of things you’ve told it – for example, it might remember that you prefer casual tone or that you “like science fiction movies.” This helps tailor future answers to you. You can disable personalization at any time, and Copilot will forget any saved details about you.

- Conversation history & retention: For convenience, consumer Copilot provides a conversation history so you can review or continue past chats. By default, your Copilot chat history is retained for up to 18 months (1.5 years) in your account. This history is private to you and used to maintain context across sessions (for example, the Copilot app or Bing Chat can recall what you asked earlier). You have full control over this data: you can delete individual chat entries or your entire conversation history at any time.

Considerations for protecting your data:

- Personalization controls: If you disable personalization, Copilot will still keep past conversations for you to view, but it won’t use them to tailor new replies. And if you prefer not to have any record, you can manually clear your chats (Microsoft’s interface makes it easy to delete history). In short, you decide how long Copilot remembers your past queries.

- Voice and input privacy: If you use voice with Copilot (for example, speaking to the Copilot app or Bing), your voice clips are handled the same way as text chats. Speech data is sent to Microsoft’s speech recognition service to convert it to text for Copilot. Voice transcripts end up in your conversation history, and you can opt out of letting those be used for training as well.

- Enterprise security: For businesses and organizations using Copilot, Microsoft takes a “your data remains your data” approach. Enterprise Copilot services are built with strict privacy guardrails so that company data and code stay protected. This is a firm guarantee in Microsoft’s terms: any data that your organization’s users feed into Microsoft 365 Copilot (documents, emails, chats, etc.) or that GitHub Copilot for Business sees from your codebase is excluded from improving Microsoft’s AI models.

For more information: see Microsoft’s Copilot Privacy Controls page and, for enterprise, Microsoft 365 Copilot privacy and security.