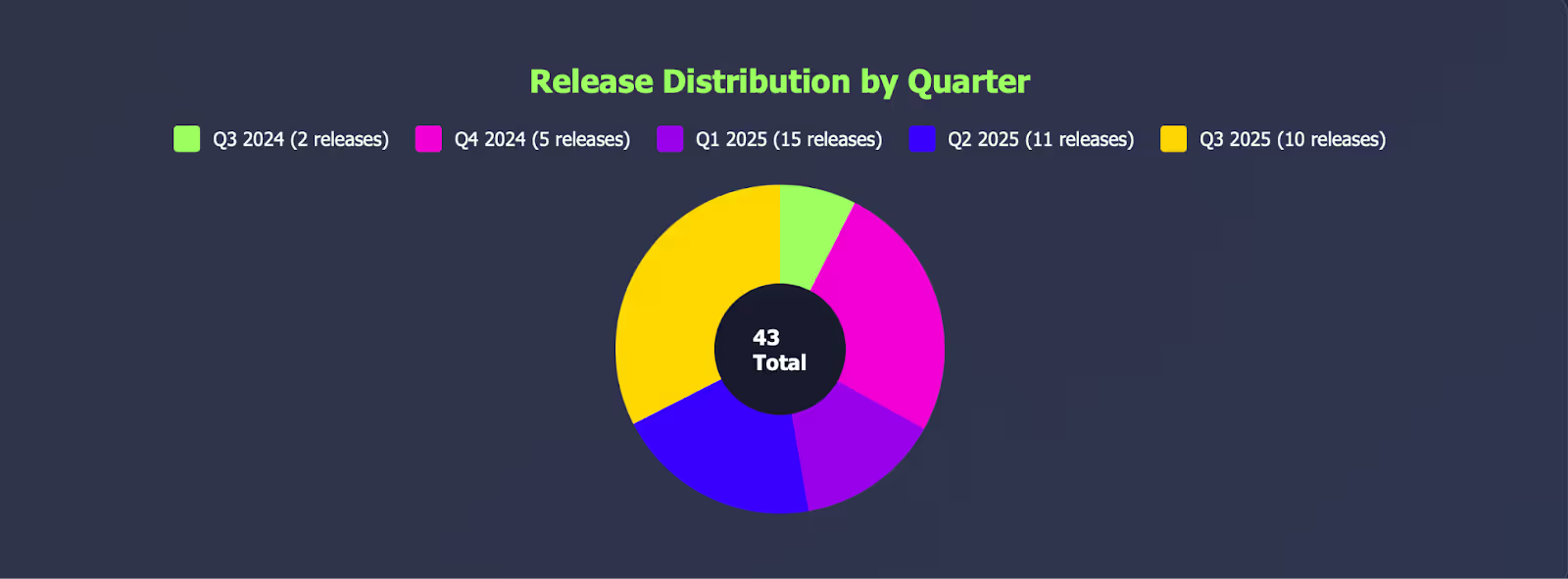

Over the last year, the big LLMs have been releasing newer, better, and smarter models at breakneck speed – 36 in the last 3 quarters alone, the latest being GPT-5.

Knowledge workers have access to new AI features on a monthly basis. That’s great for a small subsection of AI nerds. But for most people, it’s not the win it sounds like. AI knowledge scores are dropping as LLM updates skyrocket, and even AI experts aren’t able to keep up.

While GPT-5’s improvements sound like a solution to this problem – without model switching, there’s no more need to keep pace with model releases – the workforce is still overwhelmed.

Knowledge workers don’t need more breathlessly marketed model updates. They need support to actually use AI – whichever model that is. And that can only come from their leadership.

More models, more problems

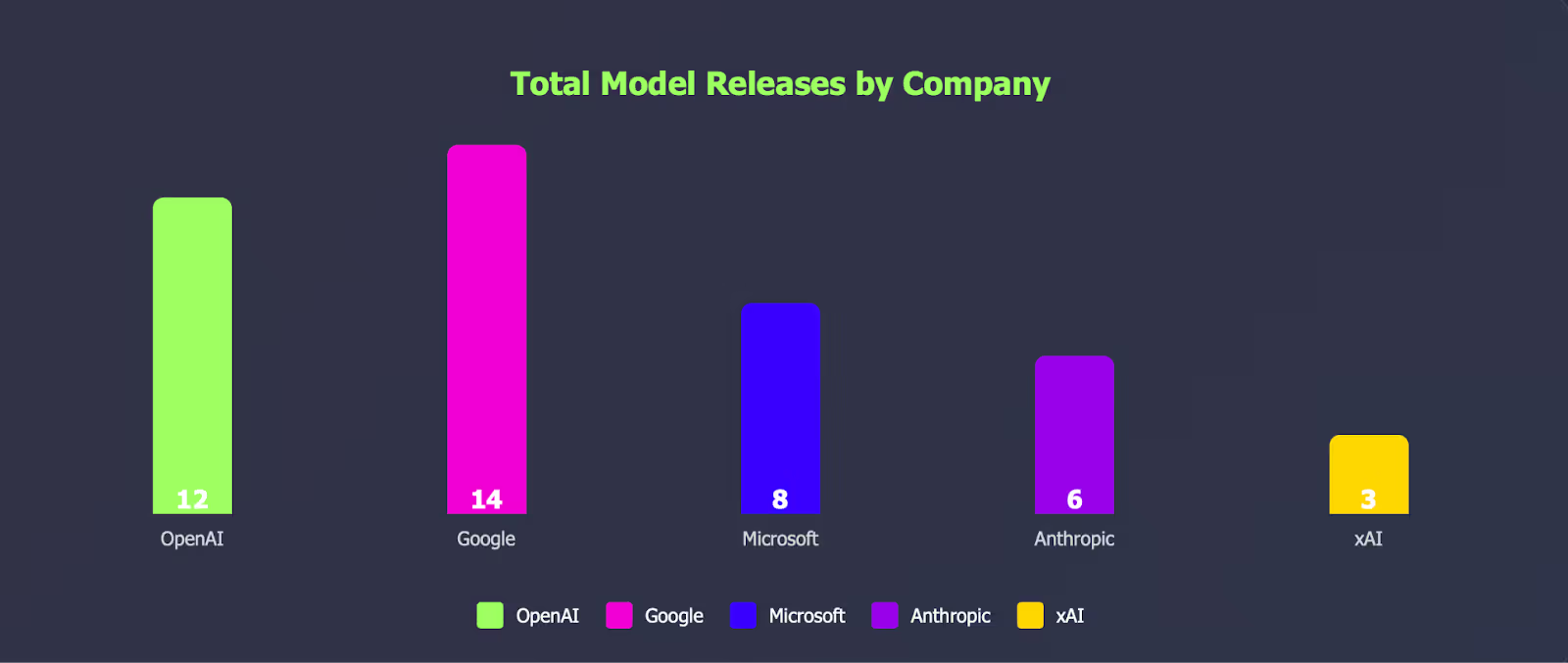

There have been 43 model releases from the 4 major LLMs (ChatGPT, Copilot, Gemini, and Claude) in the last 12 months.

Claude has 3 hybrid reasoning models and 2 non-reasoning models. Gemini has 4 reasoning models and 4 non-reasoning models. GPT-5 has consolidated its various models into one, but every other LLM’s reasoning models are separate, requiring users to understand the difference and choose between them.

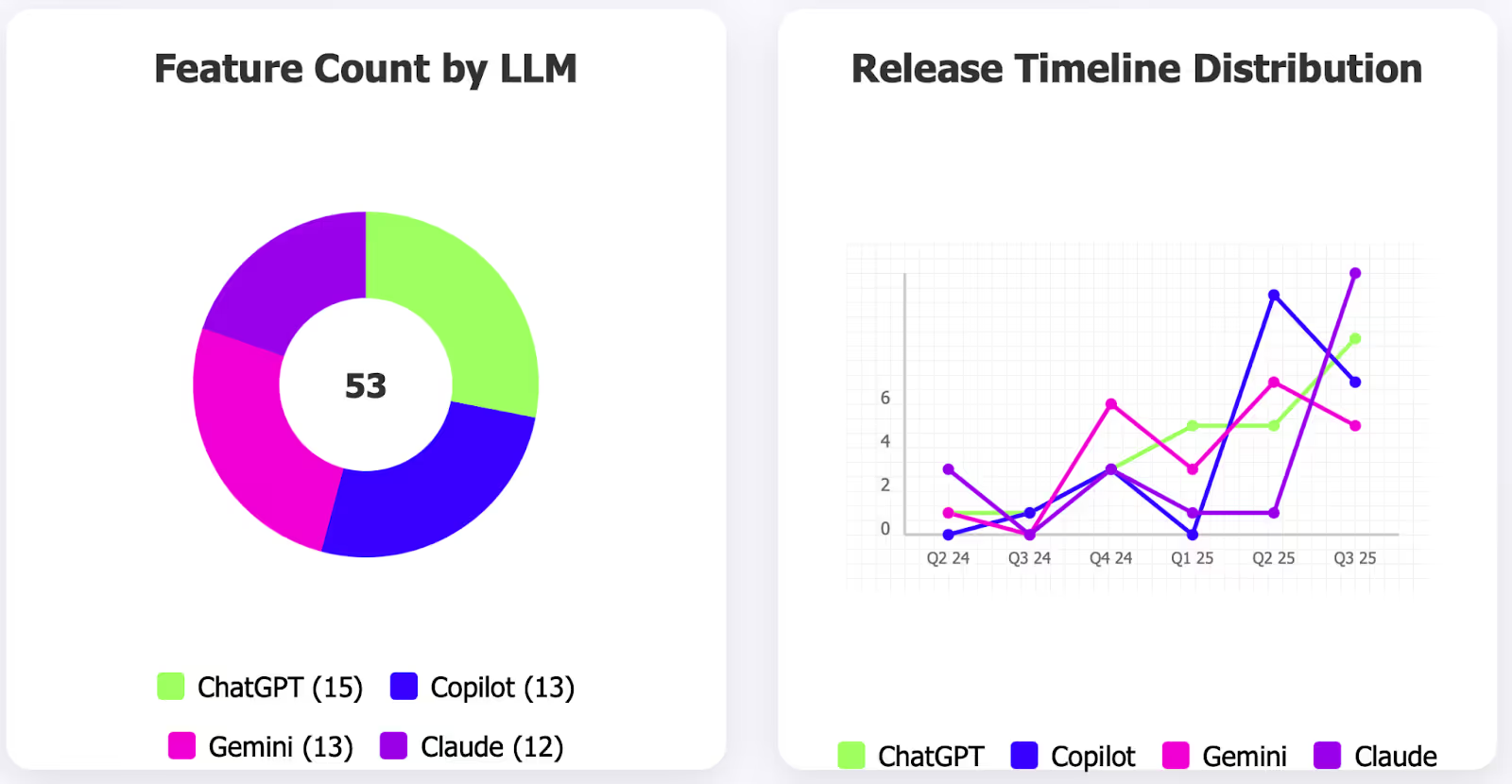

Beyond the models themselves, these LLMs had 53 new feature releases in the last year.

ChatGPT and Gemini both released Deep Research features for in-depth exploration of complex topics. Claude introduced Artifacts for AI-assisted content drafting. ChatGPT released Canvas – a persistent workspace for writing, coding, and collaborating with AI in real-time.

These are all highly valuable ways to work with AI, if you know how to use them – or frankly, if you even know they exist. But it’s difficult for the average knowledge worker to keep up with multiple new features across multiple LLMs every month, let alone learn to use them before the next feature launches.

The cost of this progress

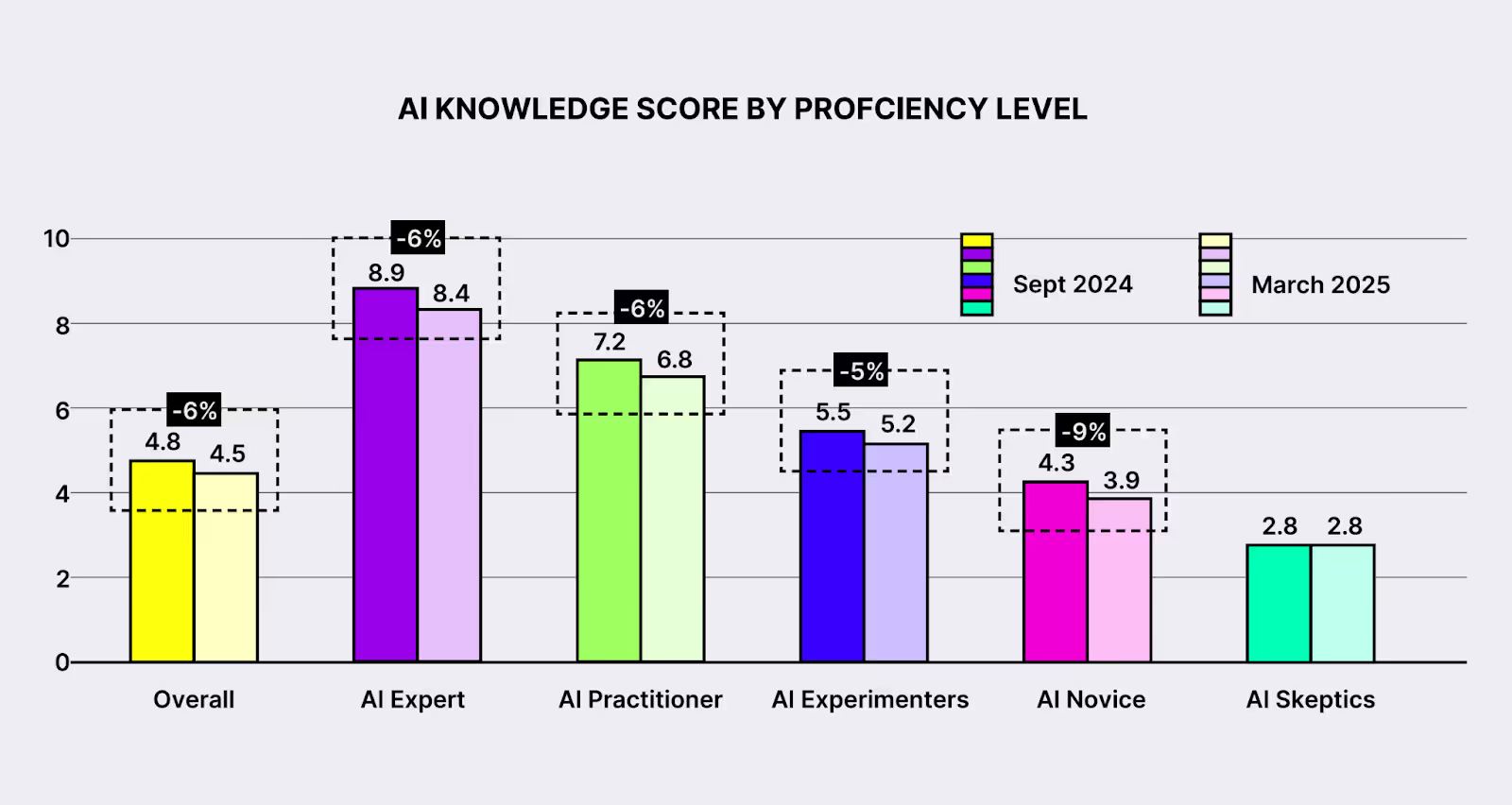

Our most recent AI Proficiency Report found that over the last six months, AI knowledge scores have dropped or stagnated across the board.

Even “AI experts” are struggling to keep their AI knowledge up to date, and the average knowledge worker scores a 4.5 out of 10 in AI knowledge.

28% of the workforce admit that they actively limit their AI use because they don’t know how to use it, and 27% say they’re overwhelmed by AI.

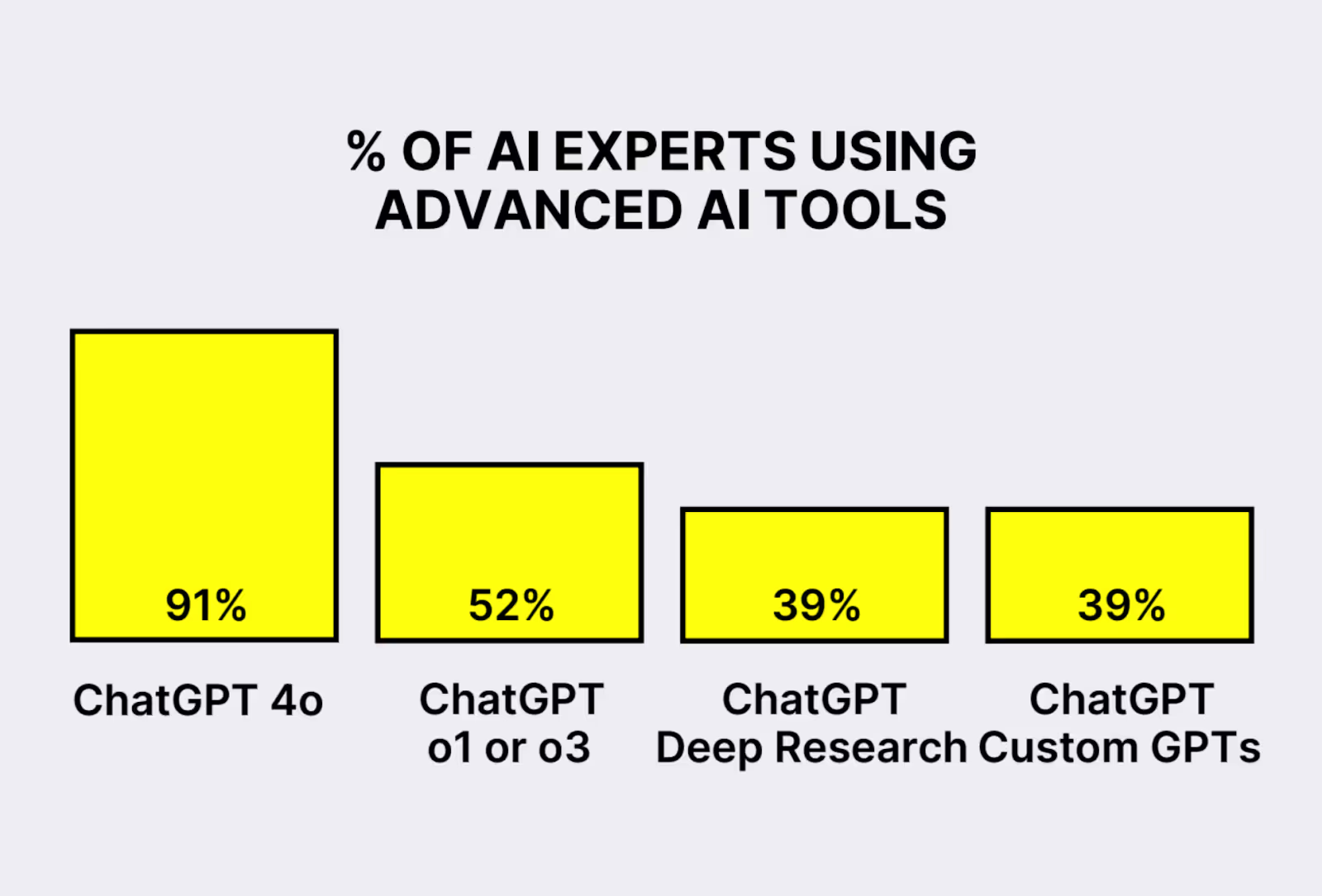

And the experts aren’t immune. Most haven’t leveraged any of the advanced capabilities their tools have to offer.

The vast majority were using ChatGPT’s default model at the time of the survey. Only about half had tried a reasoning model. Fewer than 40% had deployed a custom GPT.

How leaders can help

Knowledge workers don’t need more feature options. They need one foothold to start their climb, and they’re looking to leadership to provide it. Here’s how you can do that:

Step 1: Pick one tool that you trust

Roll out one LLM that all employees should be using. We recommend ChatGPT or Claude. Your team’s focus should be on becoming expert-level users of one general-purpose LLM.

Since many LLMs have similar features and models, mastering one helps you pick up others much more quickly. This step is critical for building a foundation of AI use that your team can build on over time.

Step 2: Go deep on use cases

Even great prompters struggle to find great use cases with AI. So once your team is comfortable with prompting your LLM, you need to start helping your team find use cases and pilot AI-augmented workflows. (We have a whole guide on that here.) This is where tools like Custom GPTs will become useful and necessary.

The most important part of this step is focusing on friction areas. This is where your team gets its guaranteed “aha!” moment. Help each team member select their highest-manpower (manual and time-intensive) or highest-brainpower (requires deep strategic thinking) workflow to augment. Then identify the tasks AI can take over or augment within that workflow (our AI Workflow Audit Worksheet is great for this).

Step 3: Grow your champions

You will have a subset of people who naturally test out every new AI feature on the market. Those are your champions, and they’ll be helpful in leading your team through AI developments as they arise.

On our team, we have several people who give regular updates on the latest AI models, whether they work, what their problems are, etc. The key is to identify these people (via a survey, champions program sign-up, or by talking to managers) and give them support and time to investigate AI and report back.

The big takeaway: Throwing a robust and ever-growing tool at a group of people with already full plates is a recipe for a failed deployment. Instead:

- Keep it simple – commit to one AI tool that everyone can get good at using

- Make use cases your goal – every employee should have AI-augmented workflows they use daily, and they should feel supported in finding them

- Put the “AI nerds” in charge of AI discovery – but don’t expect everyone to get this deep and granular with it

For the full state of AI proficiency in the workforce, download our latest AI Proficiency Report. We also have a full set of guides on nailing your AI deployment (including training and launching pilots). And if you need more help, we’re always available at teams@sectionai.com.